Medical AI Models Rely on 'Shortcuts' That Could Lead to Misdiagnosis of COVID-19

By HospiMedica International staff writers, posted on 25 Jun 2021

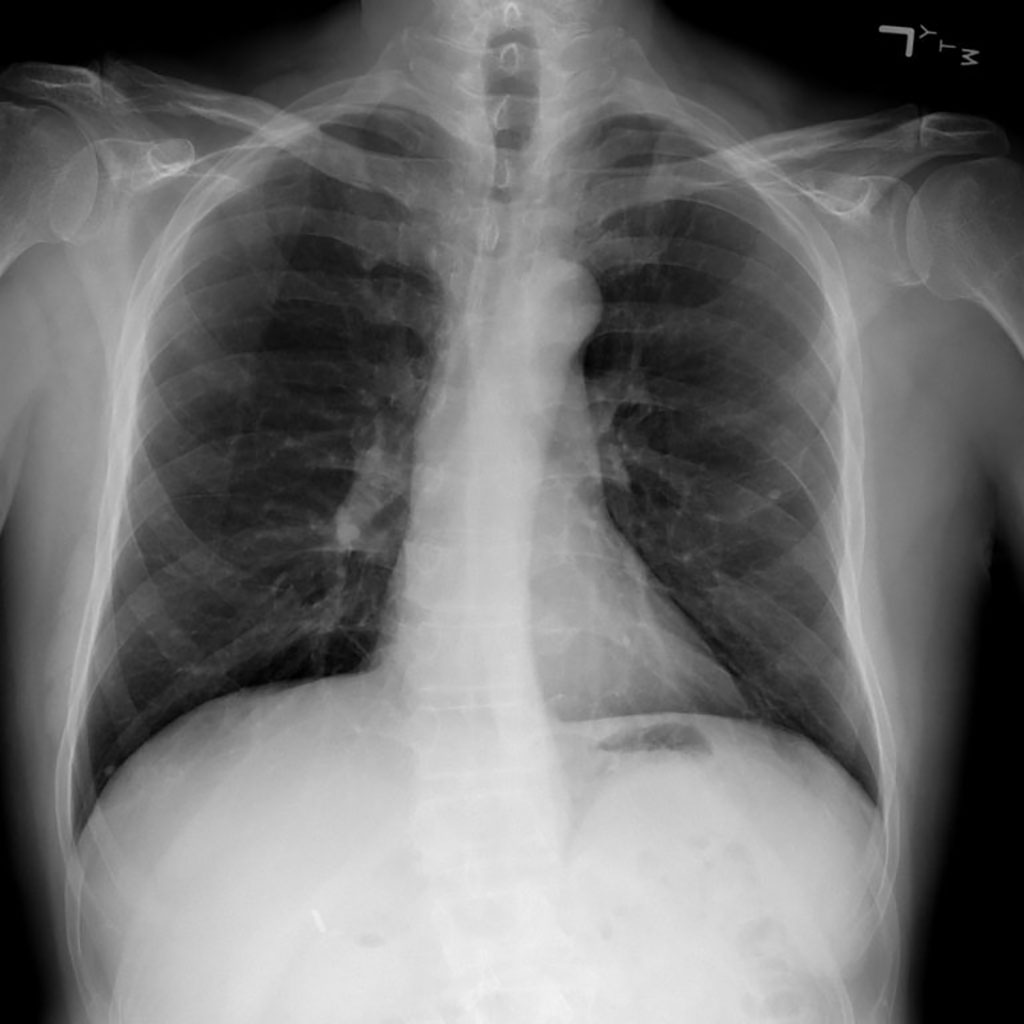

Image: Medical AI Models Rely on 'Shortcuts' That Could Lead to Misdiagnosis of COVID-19 (Photo courtesy of National Institutes of Health Clinical Center)

Researchers have discovered that medical artificial intelligence (AI) models rely on “shortcuts” that could lead to misdiagnosis of COVID-19 and other diseases.

Researchers from the Paul G. Allen School of Computer Science & Engineering at the University of Washington (Seattle, WA, USA) have discovered that AI models, like humans, have a tendency to look for shortcuts. In the case of AI-assisted disease detection, such shortcuts could lead to diagnostic errors if the models are deployed in clinical settings. AI promises to be a powerful tool for improving the speed and accuracy of medical decision-making and, in turn, patient outcomes. From diagnosing disease, to personalizing treatment, to predicting complications from surgery, AI could become as integral to patient care in the future as imaging and laboratory tests are today.

However, when the researchers examined multiple models recently put forward as potential tools for accurately detecting COVID-19 from chest radiography (X-ray), they found that, rather than learning genuine medical pathology, these models relied on shortcut learning, drawing spurious associations between medically irrelevant factors and disease status. In predicting whether an individual had COVID-19, the models ignored clinically significant indicators in favor of characteristics that were specific to each dataset, such as text markers or patient positioning. According to the researchers, shortcut learning is less robust than learning genuine medical pathology and usually means the model will not generalize well outside of the original setting. Combine that lack of robustness with the typical opacity of AI decision-making, and such a tool could go from potential life-saver to liability.

The lack of transparency is one of the factors that led the researchers to focus on explainable AI techniques for medicine and science. Most AI is regarded as a “black box” - the model is trained on massive data sets and spits out predictions without anyone really knowing precisely how the model came up with a given result. With explainable AI, researchers and practitioners are able to understand, in detail, how various inputs and their weights contributed to a model’s output. The team decided to use these same techniques to evaluate the trustworthiness of models that had recently been touted for what appeared to be their ability to accurately identify cases of COVID-19 from chest radiography.
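To make the idea of attributing an output to inputs and their weights concrete, here is a minimal Python sketch. It is not the researchers' code: the 8x8 "image", the hand-set weights, and the bright corner patch standing in for a text marker are all hypothetical, and the toy classifier is a simple linear model rather than a deep network. For such a model, each pixel's contribution to the score is its weight times its value, and a basic saliency map is the magnitude of the gradient of the output with respect to each pixel.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical toy setup: an 8x8 "radiograph" scored by a linear classifier.
H = W = 8
weights = np.full((H, W), 0.01)   # small weight on most pixels
weights[0:2, 0:2] = 1.5           # assumed: large weight on a corner patch (a stand-in for a text marker)
bias = -2.0

image = np.full((H, W), 0.5)      # uniform grey "anatomy"
image[0:2, 0:2] = 1.0             # bright marker in the top-left corner

# Model output: probability of the positive class.
logit = float((weights * image).sum() + bias)
prob = sigmoid(logit)

# Per-pixel contribution to the logit (weight times input).
contribution = weights * image

# Simple saliency map: |d prob / d pixel| = prob * (1 - prob) * |weight|.
saliency = np.abs(prob * (1.0 - prob) * weights)

# Where does the model "look" most, and how much of the score comes from the marker?
top_pixel = tuple(int(i) for i in np.unravel_index(np.argmax(saliency), saliency.shape))
marker_share = float(contribution[0:2, 0:2].sum() / contribution.sum())

print("most salient pixel:", top_pixel)
print("share of score from the marker patch:", round(marker_share, 3))
```

In this toy case the attribution concentrates on the corner patch rather than on the rest of the image, which is the kind of pattern an audit would flag as a possible shortcut.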

Despite a number of published papers heralding the results, the researchers suspected that something else might be happening inside the black box to produce the models’ predictions. Specifically, they reasoned that such models would be prone to a condition known as worst-case confounding, owing to the paucity of training data available for such a new disease. Such a scenario increased the likelihood that the models would rely on shortcuts rather than learning the underlying pathology of the disease from the training data.

The team trained multiple deep convolutional neural networks on radiography images from a dataset that replicated the approach used in the published papers. They tested each model’s performance on an internal set of images from that initial dataset that had been withheld from the training data and on a second, external dataset meant to represent new hospital systems. They found that, while the models maintained their high performance when tested on images from the internal dataset, their accuracy was reduced by half on the second, external set. The researchers referred to this drop as a generalization gap and cited it as strong evidence that confounding factors were responsible for the models’ predictive success on the initial dataset. The team then applied explainable AI techniques, including generative adversarial networks (GANs) and saliency maps, to identify which image features were most important in determining the models’ predictions.
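The internal-versus-external comparison described above can be illustrated with a small synthetic experiment in Python. This is a sketch under stated assumptions, not a reproduction of the study: the data are random numbers rather than radiographs, the model is a plain logistic regression rather than a convolutional network, and the feature names (`pathology`, `marker`) and the agreement rates are invented for illustration. The `marker` feature plays the role of a dataset-specific artifact that tracks the label closely in the internal data but carries no information in the external data.

```python
import numpy as np

def make_dataset(n, marker_agreement, rng):
    """Synthetic data: a weak genuine signal plus a dataset-specific marker."""
    y = rng.integers(0, 2, size=n)
    # Weak "pathology" signal: class means at -0.5 and +0.5 with unit noise.
    pathology = rng.normal(loc=0.5 * (2 * y - 1), scale=1.0)
    # The marker matches the label with probability `marker_agreement`.
    agree = rng.random(n) < marker_agreement
    marker = np.where(agree, y, 1 - y).astype(float)
    return np.column_stack([pathology, marker]), y

def train_logistic(X, y, lr=0.5, steps=2000):
    """Logistic regression fitted by batch gradient descent."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))
        grad = p - y
        w -= lr * (X.T @ grad) / len(y)
        b -= lr * grad.mean()
    return w, b

def accuracy(w, b, X, y):
    return float((((X @ w + b) > 0).astype(int) == y).mean())

rng = np.random.default_rng(0)

# Internal source: the marker agrees with the label 95% of the time (assumed).
X_train, y_train = make_dataset(2000, 0.95, rng)
X_int, y_int = make_dataset(1000, 0.95, rng)   # held-out internal test set
# External source: the marker is uninformative (agrees 50% of the time).
X_ext, y_ext = make_dataset(1000, 0.50, rng)

w, b = train_logistic(X_train, y_train)
internal_acc = accuracy(w, b, X_int, y_int)
external_acc = accuracy(w, b, X_ext, y_ext)
generalization_gap = internal_acc - external_acc

print("weights [pathology, marker]:", w)
print("internal accuracy:", internal_acc)
print("external accuracy:", external_acc)
print("generalization gap:", generalization_gap)
```

Because the marker is the easiest way to separate the classes in the training data, the fitted model leans on it, scores well on held-out internal data, and loses much of that accuracy once the marker stops tracking the label.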

The second dataset contained images drawn from a single region and was therefore presumed to be less prone to confounding. That presumption turned out to be wrong: when the researchers trained the models on it, even those models exhibited a corresponding drop in performance when tested on external data. These results upend the conventional wisdom that confounding poses less of an issue when datasets are derived from similar sources, and they reveal the extent to which so-called high-performance medical AI systems could exploit undesirable shortcuts rather than the desired signals. Despite the concerns raised by their findings, the researchers believe that it is unlikely that the models they studied have been deployed widely in the clinical setting. While there is evidence that at least one of the faulty models, COVID-Net, was deployed in multiple hospitals, it is unclear whether it was used for clinical purposes or solely for research. According to the team, researchers looking to apply AI to disease detection will need to revamp their approach before such models can be used to make actual treatment decisions for patients.

“A model that relies on shortcuts will often only work in the hospital in which it was developed, so when you take the system to a new hospital, it fails - and that failure can point doctors toward the wrong diagnosis and improper treatment,” explained graduate student and co-lead author Alex DeGrave. “A physician would generally expect a finding of COVID-19 from an X-ray to be based on specific patterns in the image that reflect disease processes. But rather than relying on those patterns, a system using shortcut learning might, for example, judge that someone is elderly and thus infer that they are more likely to have the disease because it is more common in older patients. The shortcut is not wrong per se, but the association is unexpected and not transparent. And that could lead to an inappropriate diagnosis.”

“Our findings point to the importance of applying explainable AI techniques to rigorously audit medical AI systems,” said co-lead author Joseph Janizek. “If you look at a handful of X-rays, the AI system might appear to behave well. Problems only become clear once you look at many images. Until we have methods to more efficiently audit these systems using a greater sample size, a more systematic application of explainable AI could help researchers avoid some of the pitfalls we identified with the COVID-19 models.”

Related Links:

Allen School


Latest COVID-19 News

- Low-Cost System Detects SARS-CoV-2 Virus in Hospital Air Using High-Tech Bubbles

- World's First Inhalable COVID-19 Vaccine Approved in China

- COVID-19 Vaccine Patch Fights SARS-CoV-2 Variants Better than Needles

- Blood Viscosity Testing Can Predict Risk of Death in Hospitalized COVID-19 Patients

- ‘Covid Computer’ Uses AI to Detect COVID-19 from Chest CT Scans

- MRI Lung-Imaging Technique Shows Cause of Long-COVID Symptoms

- Chest CT Scans of COVID-19 Patients Could Help Distinguish Between SARS-CoV-2 Variants

- Specialized MRI Detects Lung Abnormalities in Non-Hospitalized Long COVID Patients

- AI Algorithm Identifies Hospitalized Patients at Highest Risk of Dying From COVID-19

- Sweat Sensor Detects Key Biomarkers That Provide Early Warning of COVID-19 and Flu

- Study Assesses Impact of COVID-19 on Ventilation/Perfusion Scintigraphy

- CT Imaging Study Finds Vaccination Reduces Risk of COVID-19 Associated Pulmonary Embolism

- Third Day in Hospital a ‘Tipping Point’ in Severity of COVID-19 Pneumonia

- Longer Interval Between COVID-19 Vaccines Generates Up to Nine Times as Many Antibodies

- AI Model for Monitoring COVID-19 Predicts Mortality Within First 30 Days of Admission

- AI Predicts COVID Prognosis at Near-Expert Level Based Off CT Scans

Channels

Artificial Intelligence

view channelCritical Care

view channel

AI Tool Identifies Trauma Patients Requiring Blood Transfusions Before Reaching Hospital

Severe bleeding is one of the most common and preventable causes of death after traumatic injury. However, current tools often fail to accurately identify which patients urgently require blood transfusions,... Read more

New Clinical Guidelines to Reduce Central Line-Associated Bloodstream Infection

Central venous catheters are essential in intensive care units, delivering life-saving medications, monitoring cardiovascular function, and supporting blood purification. However, their widespread use... Read moreSurgical Techniques

view channelAI-Based OCT Image Analysis Identifies High-Risk Plaques in Coronary Arteries

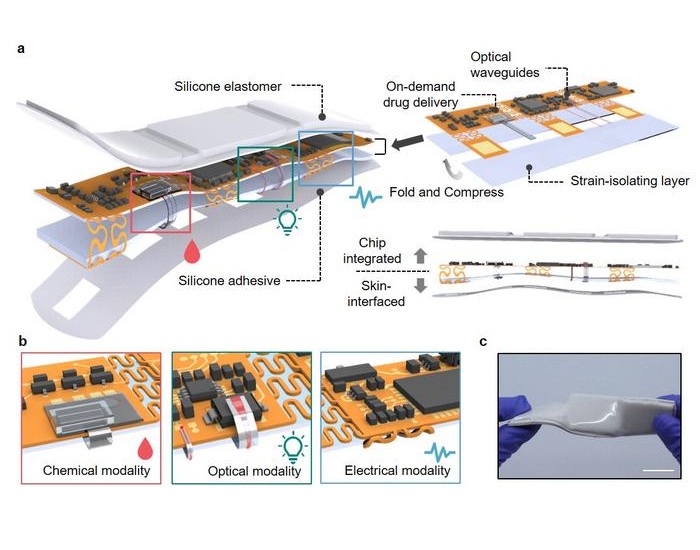

Lipid-rich plaques inside coronary arteries are strongly associated with heart attacks and other major cardiac events. While optical coherence tomography (OCT) provides detailed images of vessel structure... Read moreNeural Device Regrows Surrounding Skull After Brain Implantation

Placing electronic implants on the brain typically requires removing a portion of the skull, creating challenges for long-term access and safe closure. Current methods often involve temporarily replacing the skull or securing metal plates, which can lead to complications such as skin erosion and additional surgeries.... Read morePatient Care

view channel

Revolutionary Automatic IV-Line Flushing Device to Enhance Infusion Care

More than 80% of in-hospital patients receive intravenous (IV) therapy. Every dose of IV medicine delivered in a small volume (<250 mL) infusion bag should be followed by subsequent flushing to ensure... Read more

VR Training Tool Combats Contamination of Portable Medical Equipment

Healthcare-associated infections (HAIs) impact one in every 31 patients, cause nearly 100,000 deaths each year, and cost USD 28.4 billion in direct medical expenses. Notably, up to 75% of these infections... Read more

Portable Biosensor Platform to Reduce Hospital-Acquired Infections

Approximately 4 million patients in the European Union acquire healthcare-associated infections (HAIs) or nosocomial infections each year, with around 37,000 deaths directly resulting from these infections,... Read moreFirst-Of-Its-Kind Portable Germicidal Light Technology Disinfects High-Touch Clinical Surfaces in Seconds

Reducing healthcare-acquired infections (HAIs) remains a pressing issue within global healthcare systems. In the United States alone, 1.7 million patients contract HAIs annually, leading to approximately... Read moreHealth IT

view channel

EMR-Based Tool Predicts Graft Failure After Kidney Transplant

Kidney transplantation offers patients with end-stage kidney disease longer survival and better quality of life than dialysis, yet graft failure remains a major challenge. Although a successful transplant... Read more

Printable Molecule-Selective Nanoparticles Enable Mass Production of Wearable Biosensors

The future of medicine is likely to focus on the personalization of healthcare—understanding exactly what an individual requires and delivering the appropriate combination of nutrients, metabolites, and... Read moreBusiness

view channel

Medtronic to Acquire Coronary Artery Medtech Company CathWorks

Medtronic plc (Galway, Ireland) has announced that it will exercise its option to acquire CathWorks (Kfar Saba, Israel), a privately held medical device company, which aims to transform how coronary artery... Read more

Medtronic and Mindray Expand Strategic Partnership to Ambulatory Surgery Centers in the U.S.

Mindray North America and Medtronic have expanded their strategic partnership to bring integrated patient monitoring solutions to ambulatory surgery centers across the United States. The collaboration... Read more

FDA Clearance Expands Robotic Options for Minimally Invasive Heart Surgery

Cardiovascular disease remains the world’s leading cause of death, with nearly 18 million fatalities each year, and more than two million patients undergo open-heart surgery annually, most involving sternotomy.... Read more